This tutorial will help you write your first Hadoop program.

Prereqs

- You have set up a single-node cluster by following the single-node setup tutorial.

- You have tested your cluster using the grep example described in the Hadoop Quickstart.

- This tutorial works on Linux and Mac machines. If you are on Windows, you may need to install Python (ActivePython from ActiveState is a good, free choice), and you should do this tutorial from inside a Cygwin shell.

- We assume that you run commands from inside the Hadoop directory.

What You Will Create

- This tutorial uses the HadoopStreaming API to interact with Hadoop. This API allows you to write code in any language and use a simple text-based record format for the input and output key, value pairs. We will explore other, richer APIs in later tutorials.

- The finished version of this program will fetch the titles of web pages at particular URLs.

Fetch Titles from URLs

First we write a program to fetch titles from one or more web pages in Python:
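The original listing for this step is not reproduced here. The following is a minimal Python 3 sketch of what such a `multifetch.py` might look like, assuming it takes URLs as command-line arguments and prints one tab-separated `url<TAB>title` line per page. The regular-expression title extraction and the output format are our assumptions, not necessarily the original's. Like the program described here, it does no error checking and silently skips pages without a title.

```python
#!/usr/bin/env python3
"""multifetch.py (sketch): print the <title> of each URL given on the command line."""
import re
import sys
import urllib.request

# Assumption: a simple regex is good enough to find the first <title>...</title>.
TITLE_RE = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def extract_title(html):
    """Return the whitespace-normalized page title, or None if there is none."""
    match = TITLE_RE.search(html)
    if match is None:
        return None
    # Collapse internal whitespace so the title fits on one output line.
    return " ".join(match.group(1).split())


def fetch_title(url):
    """Download url and return its title (no error handling, as in the tutorial)."""
    with urllib.request.urlopen(url) as response:
        html = response.read().decode("utf-8", errors="replace")
    return extract_title(html)


def main(urls):
    for url in urls:
        title = fetch_title(url)
        if title is not None:  # silently skip pages without a title
            print(f"{url}\t{title}")


if __name__ == "__main__":
    main(sys.argv[1:])
```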

You can test this yourself. To make the file executable, run `chmod +x multifetch.py`. Then run it, passing one or more URLs as arguments; it should print the title of each page it fetches.

This program does not do any error checking, so it will not behave correctly if you give it invalid URLs. Also note that it silently ignores a URL if it cannot find a title.

Self Test

See if you can guess what each line of this short program does, even if you are not familiar with Python. If you are unsure about any part of it, please ask the TA; we are always happy to talk about code with you!

Hadoop Scaffolding

Next we expand multifetch.py to accept URLs to fetch from standard input (one per line).
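A minimal sketch of the standard-input version, under the same assumptions as before: regex title extraction and `url<TAB>title` output lines, which Hadoop Streaming by default splits into a key and a value at the first tab. The `map_lines` function and its `fetch` parameter are hypothetical names chosen for illustration; the parameter simply lets you exercise the line handling without network access.

```python
#!/usr/bin/env python3
"""multifetch.py (streaming sketch): read URLs from stdin, one per line,
and write "url<TAB>title" records to stdout."""
import re
import sys
import urllib.request

# Assumption: regex-based title extraction, as in the command-line sketch.
TITLE_RE = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def fetch_title(url):
    """Download url and return its whitespace-normalized title, or None."""
    with urllib.request.urlopen(url) as response:
        html = response.read().decode("utf-8", errors="replace")
    match = TITLE_RE.search(html)
    return " ".join(match.group(1).split()) if match else None


def map_lines(lines, fetch=fetch_title):
    """Yield one "url<TAB>title" record per input URL; the URL is the key."""
    for line in lines:
        url = line.strip()
        if not url:  # skip blank lines
            continue
        title = fetch(url)
        if title is not None:  # silently skip pages without a title
            yield f"{url}\t{title}"


if __name__ == "__main__":
    for record in map_lines(sys.stdin):
        print(record)
```

Passing something like a dictionary's `get` method as `fetch` makes it easy to check the mapper's behavior on blank lines and title-less pages offline.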

You can test the new multifetch.py by piping it URLs separated by newlines.

Now we must write the reducer. For this example, our reducer does not do anything interesting: it just outputs all of its input pairs with no aggregation or transformation. This is a perfectly valid reducer, but for your projects you will probably have to do more.
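An identity reducer takes only a few lines. This sketch assumes the script is saved as `reducer.py` (a hypothetical name) and that records arrive as tab-separated `key<TAB>value` lines, which is how Hadoop Streaming passes them by default.

```python
#!/usr/bin/env python3
"""reducer.py (hypothetical name): identity reducer that copies records through."""
import sys


def reduce_lines(lines):
    """Yield every non-empty input record unchanged, minus its newline."""
    for line in lines:
        record = line.rstrip("\n")
        if record:  # drop empty lines; no aggregation or transformation
            yield record


if __name__ == "__main__":
    for record in reduce_lines(sys.stdin):
        print(record)
```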

Again, make the reducer script executable with `chmod +x`.

Your reducer doesn't do anything noticeable to the output, but in general, if you want to test a Python mapper and reducer on the command line, you can chain them in a pipeline such as `cat input.txt | ./mapper.py | sort | ./reducer.py`. (Here I am using generic names instead of the specific names we used above; the same technique works with any MapReduce code written in Python.) Note that sort is a UNIX command-line tool that sorts its input. It is needed here to simulate Hadoop's behavior, since Hadoop's reduce phase is preceded by a sort phase.
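To see why the sort step matters, here is a small in-process sketch of the same map, sort, reduce sequence. The `simulate_streaming` helper and the toy word-count mapper and reducer are hypothetical illustrations, not part of the tutorial's code. Note that Python's `sorted` orders strings by code point, whereas UNIX `sort` may follow your locale, so orderings can differ for some keys.

```python
"""Sketch: simulate `cat input | mapper | sort | reducer` in one process."""
from itertools import groupby


def simulate_streaming(input_lines, mapper, reducer):
    """Run mapper over the input, sort its records, then run reducer.

    mapper and reducer each take an iterable of lines and yield lines,
    mirroring how streaming scripts read stdin and write stdout.
    """
    mapped = list(mapper(input_lines))
    # Sorting whole "key<TAB>value" lines, as the UNIX pipeline does,
    # brings all records with the same key next to each other.
    return list(reducer(sorted(mapped)))


# Hypothetical toy mapper/reducer: count words.
def count_mapper(lines):
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def count_reducer(lines):
    # Relies on sorted input: all records for one key arrive consecutively.
    pairs = (line.split("\t", 1) for line in lines)
    for key, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{key}\t{sum(int(value) for _, value in group)}"


print(simulate_streaming(["b a", "a c a"], count_mapper, count_reducer))
# ['a\t3', 'b\t1', 'c\t1']
```

Without the sort, `groupby` would see the key `a` in separate runs and emit it more than once, which is exactly the mistake the `sort` in the shell pipeline prevents.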

Running on the command line is not a substitute for testing with Hadoop, but it can be helpful as you are writing your code and will give you a good sense of what your code is doing without waiting on the overhead of starting a Hadoop task.

Running the Example with HadoopStreaming

First we must create some input data and put it in HDFS. Make two or more files named urlX, where X is a number; each file should contain exactly one URL. For example, you could create two such files, each holding a single URL.
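If you would rather not create the files by hand, a short Python sketch can write them. The function name `write_url_files` is hypothetical, and the URLs you pass in are up to you.

```python
"""Sketch: write each URL into its own file named url1, url2, ..."""
from pathlib import Path


def write_url_files(urls, directory="."):
    """Create one file per URL, named urlX where X counts up from 1."""
    paths = []
    for number, url in enumerate(urls, start=1):
        path = Path(directory) / f"url{number}"
        path.write_text(url + "\n")  # exactly one URL per file
        paths.append(path)
    return paths
```

For example, calling `write_url_files(["http://www.example.com/", "http://www.example.org/"])` with these placeholder URLs would create `url1` and `url2` in the current directory.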

Now we must put these files into HDFS. Remember the command to put files into HDFS?

We will also use the mkdir command so that the input files are in their own directory. You may be able to come up with a more efficient way to put many such URL files into HDFS. This part of the tutorial has been updated: if you are running on a real cluster, you need to include a full, absolute path, as illustrated below, and this path must be in your NFS-mounted home directory.

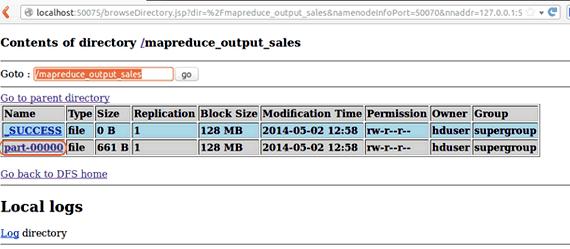

At long last, we can run the job. Hadoop reports the job's progress as it runs, and once it finishes you should be able to view the results in the job's output directory in HDFS.
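Because the exact command depends on your Hadoop version, here is a hedged Python sketch that only assembles and launches a Hadoop Streaming job using the standard `-input`, `-output`, `-mapper`, and `-reducer` options. The streaming jar path, the `bin/hadoop` launcher location, the HDFS directory names, and the function names are assumptions you will need to adjust. The scripts are turned into absolute paths to match the full-path requirement for real clusters described earlier.

```python
"""Sketch: assemble (and optionally run) a Hadoop Streaming job command."""
import os
import subprocess


def streaming_command(jar, input_dir, output_dir, mapper, reducer, hadoop="bin/hadoop"):
    """Return the argument list for a streaming job.

    Assumption: the mapper and reducer live on a filesystem every node can
    see (e.g. an NFS-mounted home directory), so absolute paths suffice.
    """
    return [
        hadoop, "jar", jar,
        "-input", input_dir,
        "-output", output_dir,
        "-mapper", os.path.abspath(mapper),
        "-reducer", os.path.abspath(reducer),
    ]


def run_job(*args, **kwargs):
    """Launch the job (run from the Hadoop directory); raise if it fails."""
    subprocess.run(streaming_command(*args, **kwargs), check=True)
```

A call might look like `run_job("contrib/hadoop-streaming.jar", "urls", "titles", "multifetch.py", "reducer.py")`, where every argument is a placeholder for your own jar path, HDFS directories, and script names. Hadoop refuses to start a job whose output directory already exists, so pick a fresh output name for each run.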

Look at the Job Stats

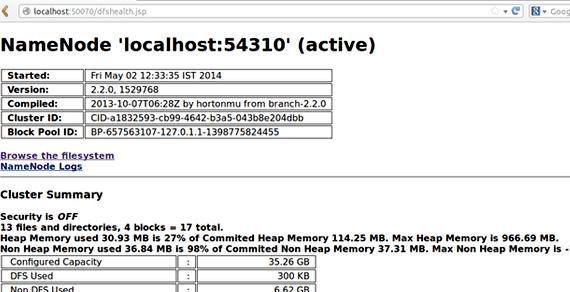

You can look at the job statistics through two web pages: the Map/Reduce administration page and the NameNode status page.

You can also use the Map/Reduce administration link to view the status of a job as it runs (but you must refresh the page manually; it does not refresh automatically).

These links use the port numbers from the Quickstart. If you are on a Berry patch machine, you should have changed them to your assigned port numbers: the Map/Reduce administration link should use the port you assigned to the mapred.job.tracker.info.port property in conf/hadoop-site.xml, and the NameNode status link should use the port you assigned to the dfs.info.port property. If you are on your personal computer, the links should work as-is.
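If you are unsure which ports you assigned, a small sketch can read them back from `conf/hadoop-site.xml`. It assumes the usual `<configuration>`/`<property>`/`<name>`/`<value>` layout of Hadoop configuration files and that the web pages are served on `localhost`; the function names are hypothetical.

```python
"""Sketch: build the two status-page URLs from ports set in conf/hadoop-site.xml."""
import xml.etree.ElementTree as ET


def read_properties(xml_text):
    """Map each <property>'s <name> to its <value> in a Hadoop config file."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def status_urls(xml_text, host="localhost"):
    """Return (Map/Reduce admin URL, NameNode status URL); None if a port is unset."""
    props = read_properties(xml_text)

    def url(key):
        port = props.get(key)
        return f"http://{host}:{port}/" if port else None

    return url("mapred.job.tracker.info.port"), url("dfs.info.port")
```

To use it, pass the file's contents, for example `status_urls(open("conf/hadoop-site.xml", "rb").read())`; reading bytes lets the XML parser honor any encoding declaration in the file.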

Questions for Further Thought

- Did multifetch.py run faster with Hadoop than when you tested it on the command line?

- How might you extend multifetch.py to fetch other properties? Remember that map can output multiple key, value pairs for each input.

What about Java?

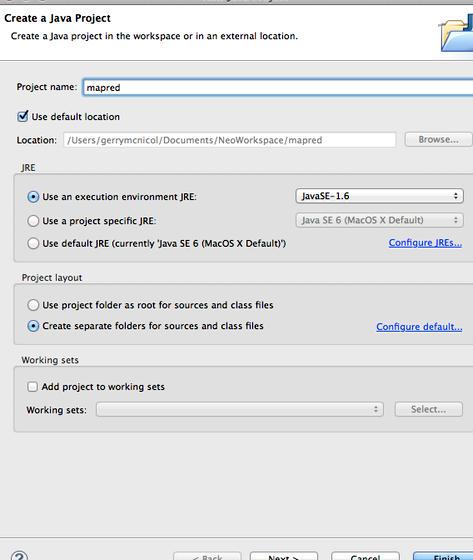

There is a Java version of the multifetch example. Please read it and try it out if you wish to write your Hadoop code in Java.

What’s Next?

2008 Brandeis University, cs147a Networks and Distributed Computing
