I created a web application. It’ll run on a farm of 5 IIS servers. It’s essential that once a session is established with a server in the farm, further HTTP requests within that session are routed to that same server for the duration of the session.

Today I came across F5’s site and learned about “sticky sessions.” Because many of my users will be mobile (e.g. iPhone), their IP address may change in the middle of a single session. That means the source IP can’t be used to identify unique sessions. White papers claim that the F5 LTM device provides a solution for this, allowing me to use content within the HTTP request itself [or a cookie] to determine session identity.

So far so good. But then I got to thinking… That F5 device is a single point of failure. Furthermore, what if I drastically increase capacity and want to add more F5 devices? Setting up a cluster of them makes sense. But despite Googling as best I can, I can’t find a white paper describing the basic concepts of how a cluster functions “under the hood.”

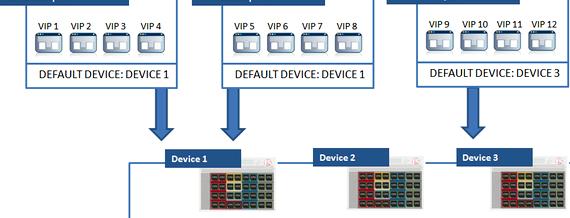

Consider… Let’s say I buy two F5 devices. They share a common “virtual” IP on the external (Internet-facing) interface of my network. An HTTP connection comes in and somehow the two devices determine that F5 #1 should answer the call. F5 #1 now has an in-memory map that associates the identity of that session [via cookie, let’s say] with internal server #4. Two minutes later that same customer initiates a new HTTP connection as part of the same session. The destination “virtual” IP is the same but his source IP has changed. How on earth can I be sure that F5 #1 will get that connection instead of F5 #2?

If the former receives it, we’re in good shape because it has an in-memory map to identify the session. But if the latter receives it, it won’t know that the session is associated with server #4.

Do the two F5 devices share information with each other somehow to make this work? Or is the configuration I’m describing simply not a functional/typical way of doing things?

Sorry for the newb questions… this stuff is all new to me.

asked Dec 1 ’11 at 2:13

“It’s essential that once a session is established with a server in the farm, further HTTP requests within that session are routed to that same server for the duration of the session.” What rationale could lead to such an unorthodox design? It seems to me a better alternative would be to have the clients download a list of available servers and connect to a specific server. Or learn how to maintain and restore session state between requests. Greg Askew Dec 1 ’11 at 2:46

Ah, yes, I see what you mean. The failure aspect is important, but I guess I was coming from a perspective of growing capacity. Let’s say two years from now my single F5 gets overwhelmed. Wouldn’t I cluster it with another one to share the load? If so, do they share their state with each other? Maybe I’m using the term cluster incorrectly. Sorry if that’s the case. Thanks. Chad Decker Dec 1 ’11 at 2:37

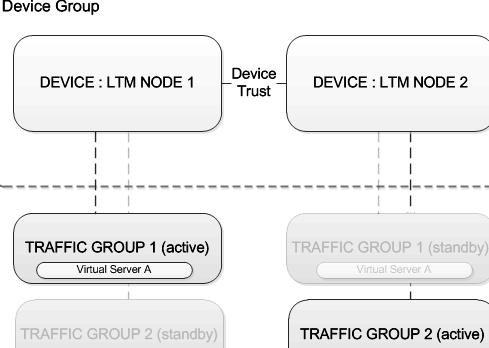

I believe at the higher end you can do active/active clustering, but active/active really means active/passive for some IPs and passive/active for another set of IPs or IP.

So at any time, one IP is only live on one F5. As capacity increases, in practice you’d end up just upgrading your F5 hardware to a higher-performance box. R D Dec 1 ’11 at 2:44

@ChadDecker – the term cluster is ambiguous, which is why you’ll usually find it referred to as an HA cluster, or an Active/Passive cluster, or an Active/Active cluster. What you’re looking for is a cluster used for scaling out, rather than high availability. Mark Henderson ♦ Dec 1 ’11 at 3:04

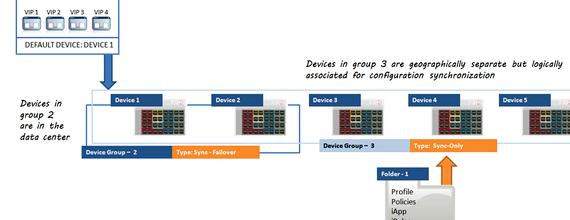

I don’t think you can truly ‘cluster’ two F5 content switches, though I believe they are working on the feature, but I could be wrong. Clustering load-balancers is a big engineering challenge: how do they share information at layer 4 or layer 7, how do they communicate over layer 2 or layer 3, and how is clustering and information-sharing enabled without affecting performance, given that load-balancers need to operate at essentially wire speed?

Consider firewalls years ago: proxy-based firewalls were always standalone nodes because they did so much at layer 7 that it was basically impossible to share this information across nodes without killing performance, whereas stateful packet filters only needed to transfer layer 4 information, and even that was an overhead. Load-balancers will typically be deployed with much of their configuration, as in your case with VIPs, acting as an endpoint, so the whole TCP session is rewritten and the load-balancer becomes the client to the server (i.e. there are essentially two flows, which is one reason why the actual client can’t perform HTTP pipelining direct to the back-end server).

With HA, you don’t achieve the scale-out that you want, so you will need to scale up your load-balancer to handle the load etc. Vendors like this, as scale-up generally (not always, as sometimes you can enable extra CPU as part of an upgrade) means a new, bigger box. HA does provide resiliency and reliability though, obviously. With HA, you typically have command propagation, configuration synchronisation and some element of session exchange (which can be configured to some degree, as this can cause load).

You could look at scaling by load-balancing your load-balancers (i.e. LB -> LB -> Web Farm), but that’s not great: it can introduce latency, is (very) expensive, and your infrastructure has another point of failure, though I have seen it successfully implemented.

You can use something like VRRP, which is a kind of quasi-cluster. In this implementation you might have two sets of HA pairs of load-balancers in front of your web farm; call them HA1 and HA2. With VRRP, you can create two VIPs, one being live on HA1 (vip1) and the other being live on HA2 (vip2), because of the higher VRRP priority configured there. Both vip1 and vip2 can fail over to the other HA pair through VRRP, either automatically (based on monitors etc.) or manually by lowering the VRRP priority.
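To make the priority idea concrete, here is a minimal sketch in Python. It is a deliberately simplified model and not real VRRP (no advertisements, timers or tie-breaking), and the priority values 200, 100 and 50 are made-up numbers for illustration only.

```python
def vrrp_master(priorities):
    """Return the node with the highest priority (simplified election)."""
    return max(priorities, key=priorities.get)

# Hypothetical priorities: each VIP prefers a different HA pair.
vips = {
    "vip1": {"HA1": 200, "HA2": 100},
    "vip2": {"HA1": 100, "HA2": 200},
}

# Normal operation: the load is split, vip1 on HA1 and vip2 on HA2.
owners = {vip: vrrp_master(p) for vip, p in vips.items()}

# Manual failover: lower HA1's priority for vip1 below HA2's.
vips["vip1"]["HA1"] = 50
after = {vip: vrrp_master(p) for vip, p in vips.items()}

print(owners)  # vip1 on HA1, vip2 on HA2
print(after)   # both VIPs now on HA2
```

Each VIP is still only live on one HA pair at any moment, so this spreads VIPs across pairs; it does not make two pairs share the sessions of a single VIP.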

Most vendors have KB articles on the above configurations. I believe there is one vendor that has true clustering in their product, but I’ll let you google for that.

All load-balancers have several forms of persistence, which you apply to the back-end server association. Popular forms today are cookie and hash (based on the 4-tuple and a couple of other things). When the load-balancer acts as an endpoint, as in your scenario, once the TCP connection is fully established it will create what is essentially a protocol control buffer, which contains information about the connection (essentially the 4-tuple and a couple of other things again). There are two such buffers, one representing each side of the connection, and they reside in memory on the load-balancer until the session is terminated, at which point they are cleared to free the memory for reuse.
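As a rough illustration of the two persistence styles, here is a small Python sketch. The server names, the cookie name `lb_backend` and the use of SHA-256 are all assumptions made for the example; real devices use their own hash inputs and normally encode or encrypt the cookie value.

```python
import hashlib

SERVERS = ["iis1", "iis2", "iis3", "iis4", "iis5"]  # hypothetical pool

def pick_by_hash(src_ip, src_port, dst_ip, dst_port):
    """Hash persistence: the same 4-tuple always maps to the same backend."""
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return SERVERS[int.from_bytes(digest[:8], "big") % len(SERVERS)]

def pick_by_cookie(cookies):
    """Cookie persistence: return the backend named in the cookie, or None."""
    backend = cookies.get("lb_backend")
    return backend if backend in SERVERS else None

# The hash follows the connection details, so a new source IP may land elsewhere.
first = pick_by_hash("198.51.100.7", 50000, "203.0.113.10", 443)
again = pick_by_hash("198.51.100.7", 50000, "203.0.113.10", 443)

# The cookie travels with the client, whatever its current source IP is.
sticky = pick_by_cookie({"lb_backend": "iis4"})
```

This is why cookie persistence suits mobile clients better: the hash input changes when the client’s address changes, while the cookie does not.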

answered May 10 ’12 at 14:45

You basically have two options here on the F5 platform, depending on the type of VIP you’re configuring. On a Layer 4 VIP (effectively a NAT) you can configure connection mirroring, which allows the TCP session not to be interrupted during an HA event. This isn’t possible for a Layer 7 VIP (there’s far too much state to “back up” to the Standby in real time), but you can mirror cookie persistence records, which will ensure that after the HA failover, when the client reconnects, it will be directed to the same backend server.
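Conceptually, persistence mirroring looks something like the Python sketch below. This is only a toy model of the idea, not how the F5 implements it; the `Unit` class and its methods are hypothetical names.

```python
class Unit:
    """Toy HA unit holding a cookie -> backend persistence table."""

    def __init__(self, name):
        self.name = name
        self.records = {}
        self.peer = None  # set to the standby unit to enable mirroring

    def persist(self, cookie, backend):
        """Store a persistence record and copy it to the peer, if any."""
        self.records[cookie] = backend
        if self.peer is not None:
            self.peer.records[cookie] = backend

    def lookup(self, cookie):
        return self.records.get(cookie)

active, standby = Unit("f5-1"), Unit("f5-2")

# Without mirroring, the standby knows nothing about the session.
active.persist("session-a", "iis4")
before = standby.lookup("session-a")   # None

# With mirroring, the standby can route a reconnecting client correctly.
active.peer = standby
active.persist("session-b", "iis4")
after_failover = standby.lookup("session-b")   # "iis4"
```

Only the small persistence record is copied, not the full Layer 7 connection state, which is why the client has to reconnect after a failover.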

I don’t have first-hand knowledge, but I believe that the Netscaler has similar capabilities.

That said, a scheme that’s entirely dependent on this kind of persistence is going to have problems, especially if you have backend servers that are moving in and out of the VIP rotation on any kind of regular basis. I’d encourage you to investigate standing up a shared cache (memcached is great for this) that any member of your server pool can query to validate the cookie on an incoming request. It’s easier than you think 🙂
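A minimal sketch of the shared-cache idea, in Python. The `SharedCache` class is an in-process stand-in so the example runs on its own; in practice it would be a memcached client, and the key format and function names here are assumptions for illustration.

```python
import secrets

class SharedCache:
    """In-process stand-in for a shared cache such as memcached."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)

def start_session(cache, user):
    """Create a session, store it in the shared cache, return the cookie token."""
    token = secrets.token_hex(16)
    cache.set(f"session:{token}", {"user": user})
    return token

def handle_request(cache, server_name, cookie_token):
    """Any server in the pool can validate the cookie against the cache."""
    session = cache.get(f"session:{cookie_token}")
    if session is None:
        return f"{server_name}: 401 unknown session"
    return f"{server_name}: 200 hello {session['user']}"

cache = SharedCache()
token = start_session(cache, "chad")
print(handle_request(cache, "iis2", token))    # served by one server
print(handle_request(cache, "iis5", token))    # same session, different server
print(handle_request(cache, "iis1", "bogus"))  # unknown cookie is rejected
```

With session state held outside the web servers, it stops mattering which load-balancer or backend a request lands on.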

answered Aug 24 ’12 at 3:19


2016 Stack Exchange, Inc
